Friday, December 30, 2016

Wednesday, December 28, 2016

Saturday, December 24, 2016

Fwd: BitTorrent Live’s “cable-killer” P2P video app finally hits iOS | TechCrunch

---------- Forwarded message ----------

From: J M

https://techcrunch.com/2016/

Thursday, December 22, 2016

The OpenMV project - OpenMV Cam M7

The OpenMV Cam M7 is powered by the 216 MHz ARM Cortex-M7 processor, which can execute up to 2 instructions per clock.

512KB of RAM, enabling 640x480 grayscale images/video (RGB565 is still limited to 320x240).

MicroPython has 64KB more heap space (~100KB total) on the OpenMV Cam M7, so you can do more in MicroPython now.

$65 retail ($55 to pre-order)
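A quick way to see why 512KB of RAM allows 640x480 grayscale but keeps RGB565 at 320x240 is to size the raw frame buffers. This sketch assumes uncompressed frames at 1 byte per pixel for grayscale and 2 bytes per pixel for RGB565, and it ignores the RAM that the firmware and the MicroPython heap also need. `frame_bytes` is a hypothetical helper, not part of the OpenMV API.

```shell
# Hypothetical helper: bytes in one uncompressed frame (width * height * bytes per pixel).
frame_bytes() {
  echo $(( $1 * $2 * $3 ))
}

RAM_BYTES=$(( 512 * 1024 ))  # 512KB of RAM on the OpenMV Cam M7

# Assumption: grayscale = 1 byte/pixel, RGB565 = 2 bytes/pixel.
for spec in "640 480 1 VGA-grayscale" "320 240 2 QVGA-RGB565" "640 480 2 VGA-RGB565"; do
  set -- $spec                       # split into width, height, bytes/pixel, label
  size=$(frame_bytes "$1" "$2" "$3")
  if [ "$size" -le "$RAM_BYTES" ]; then fits="fits"; else fits="does NOT fit"; fi
  echo "$4: $size bytes ($fits in $RAM_BYTES bytes)"
done
```

A full 640x480 RGB565 frame (614,400 bytes) is larger than all 512KB before anything else is loaded, which is consistent with the RGB565 limit; the real ceiling also depends on whatever other buffers the firmware keeps in RAM.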

Monday, December 12, 2016

Fwd: live "gov" webcams on Oahu

From: WW

Date: Mon, Dec 12, 2016 at 9:10 AM

Subject: live "gov" webcams on Oahu

To: John Sokol

Sunday, December 11, 2016

SYNQ - Video API built for developers

Synq.fm is a cloud-based video API offering:

- Simple video upload and storage

- Transcoding into various formats for a variety of platforms

- A customizable, embedded player

- Attaching custom video metadata (like tags, groups, playlists, and so on)

- Webhook notifications for various events

- Geo-local content delivery

Automatically switch between several Content Delivery Networks (CDNs) to increase performance and improve user experience.

Thursday, December 08, 2016

Magic Leap is actually way behind, like we always suspected it was

Magic Leap’s allegedly revolutionary augmented reality technology may in fact be years away from completion and, as it stands now, is noticeably inferior to Microsoft’s HoloLens headset, according to a report from The Information. The report, which incorporates an interview with Magic Leap CEO Rony Abovitz, reveals that the company posted a misleading product demo last year showcasing its technology. The company has also had trouble miniaturizing its AR technology from a bulky helmet-sized device into a pair of everyday glasses, as Abovitz has repeatedly claimed the finished product will accomplish.

YES, MORE BULLSHITTERS. Meanwhile, I can't get any money for the several AR startups I'm advising, because guys like this sucked it all up.

Monday, December 05, 2016

Tuesday, November 29, 2016

MIT Creates AI Able to See Two Seconds Into the Future

http://www.dailygalaxy.com/my_weblog/2016/11/mit-creates-ai-that-is-able-to-see-two-seconds-into-the-future-on-monday-the-massachusetts-institute-of-technology-announce.html

The Massachusetts Institute of Technology announced its new artificial intelligence. Based on a photograph alone, it can predict what'll happen next, then generate a one-and-a-half-second video clip depicting that possible future.

When we see two people meet, we can often predict what happens next: a handshake, a hug, or maybe even a kiss. Our ability to anticipate actions is thanks to intuitions born out of a lifetime of experiences.

Machines, on the other hand, have trouble making use of complex knowledge like that. Computer systems that predict actions would open up new possibilities ranging from robots that can better navigate human environments, to emergency response systems that predict falls, to Google Glass-style headsets that feed you suggestions for what to do in different situations.

This week researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have made an important new breakthrough in predictive vision, developing an algorithm that can anticipate interactions more accurately than ever before.

http://web.mit.edu/vondrick/tinyvideo/paper.pdf

http://web.mit.edu/vondrick/tinyvideo/

Generating Videos with Scene Dynamics

Carl Vondrick (MIT), Hamed Pirsiavash (University of Maryland Baltimore County), Antonio Torralba (MIT)

NIPS 2016

Monday, November 28, 2016

Build a hardware-synchronized 360 VR camera with YI 4K action cameras

http://open.yitechnology.com/vrcamera.html

http://www.yijump.com/

YI 4K Action Camera is your perfect pick for building a VR camera. The camera boasts high-resolution image detail, powered by amazing video capture and encoding capabilities, along with long battery life and its camera geometry. This is what makes us stand out, and it is why Google recognized us and chose us as a partner for its next-version VR camera, Google Jump (www.yijump.com).

There are a number of ways to build a VR camera with YI 4K Action Cameras; they differ mainly in how you make multiple cameras start and stop recording. In general, we want all cameras to start and stop recording synchronously so you can easily record and stitch your virtual reality video.

The easiest solution is to control the cameras manually, one by one. It is convenient and quick; however, it doesn't guarantee synchronized recording.

A better solution is to use Wi-Fi: all cameras are set to Wi-Fi mode and connected to a smartphone hotspot or a Wi-Fi router. Once setup is done, you should be able to control all cameras with the smartphone app over Wi-Fi. For details, please check out https://github.com/YITechnology/YIOpenAPI

Please note that this solution also has its limitations. For instance, when there are too many cameras or Wi-Fi interference is severe, controlling the cameras via the smartphone app can sometimes fail. Also, synchronized video capture is not guaranteed, since Wi-Fi is not a real-time communication protocol.

You can also control all cameras with a Bluetooth-connected remote control. However, its limitations are similar to those of the Wi-Fi solution.

There are also solutions that try to synchronize the video files offline, after recording has finished. This is normally done by detecting the same audio signal or video motion in each file and aligning the files on it. Since these solutions do not control the recording start time, their synchronization error is at least 1 frame.
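To put the one-frame figure from the paragraph above into time units: a frame lasts 1/fps seconds, so the minimum offline-alignment error shrinks as the frame rate rises. The frame rates below are illustrative examples, not a list of the YI 4K's supported modes, and `frame_period_us` is a hypothetical helper.

```shell
# Hypothetical helper: duration of one frame in microseconds (integer-truncated).
frame_period_us() {
  echo $(( 1000000 / $1 ))
}

# Example frame rates, assumed for illustration only.
for fps in 24 30 60 120; do
  echo "${fps} fps: 1 frame = $(frame_period_us "$fps") us"
done
```

At 30 fps, a one-frame misalignment is roughly 33 ms, which can be enough for a fast-moving subject to appear in visibly different positions on either side of a stitch seam.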

HARDWARE SYNCHRONIZED RECORDING

In this article, we introduce a hardware solution to the synchronization problem. All cameras are connected with a Multi Endpoint cable, over which recording start and stop commands are transmitted among the cameras in real time, producing a high-resolution, synchronized virtual reality video.

Sunday, November 27, 2016

Saturday, November 26, 2016

Breathing Life into Shape (SIGGRAPH 2014)

This has serious implications for security, interrogation, marketing, health, and medicine.

Soon, high-res 3D depth cameras will be cheap and commonplace.

Friday, November 25, 2016

LOW-COST VIDEO STREAMING WITH A WEBCAM AND RASPBERRY PI

http://videos.cctvcamerapros.com/raspberry-pi/ip-camera-raspberry-pi-youtube-live-video-streaming-server.html

Spoiler alert: basically, they use the Raspberry Pi to connect to the CCTV IP camera over RTSP and then push a live stream up and out through your firewall and NAT to YouTube, or to any other service that accepts RTMP.

Most of this is a good NOVICE GUIDE to setting up and configuring the Pi, and to buying an IP camera from them.

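#!/bin/bash
# Restream an IP camera's RTSP feed to YouTube Live over RTMP,
# unless an ffmpeg process is already running.
SERVICE="ffmpeg"
RTSP_URL="rtsp://192.168.0.119:554/video.pro1"
YOUTUBE_URL="rtmp://a.rtmp.youtube.com/live2"
YOUTUBE_KEY="dn7v-5g6p-1d3w-c3da"

# Input 0: generated silent audio (YouTube expects an audio track).
# Input 1: the camera's stream, pulled over TCP.
# Video is passed through without re-encoding (-c:v copy), the silent
# audio is encoded to AAC, and the stream stops after 12 hours.
COMMAND=(ffmpeg -f lavfi -i anullsrc -rtsp_transport tcp -i "${RTSP_URL}"
  -c:v copy -c:a aac -strict experimental -t 12:00:00
  -f flv "${YOUTUBE_URL}/${YOUTUBE_KEY}")

if pgrep -x "${SERVICE}" > /dev/null; then
  echo "${SERVICE} is already running."
else
  echo "${SERVICE} is NOT running! Starting now..."
  "${COMMAND[@]}"
fi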

They have a bunch of neat Pi video projects on their website.

http://videos.cctvcamerapros.com/raspberry-pi

Wednesday, November 23, 2016

Chronos 1.4 high-speed camera up to 21,600fps

Chronos 1.4 is a purpose-designed, professional high-speed camera in the palm of your hand. With a 1.4 gigapixel-per-second throughput, you can capture stunning high-speed video at up to 1280x1024 resolution. Frame rate ranges from 1,057fps at full resolution, up to 21,600fps at minimum resolution.

Features and specs

See the full specs in the Chronos 1.4 Datasheet.

- 1280x1024 1057fps CMOS image sensor with 1.4Gpx/s throughput

- Higher frame rates at lower resolution (see table below)

- Sensor dimensions 8.45 x 6.76mm, 6.6um pixel pitch

- Global shutter - no “jello” effect during high-motion scenes

- Electronic shutter from 1/fps down to 2us (1/500,000 s)

- CS and C mount lens support

- Focus peaking (focus assist) and zebra exposure indicator

- ISO 320-5120 (Color), 740-11840 (Monochrome) sensitivity

- 5" 800x480 touchscreen (multitouch, capacitive)

- Machined aluminum case

- Record time 4s (8GB) or 8s (16GB)

- Continuous operation on AC adapter (17-22V 40W)

- 1.75h runtime on user-replaceable EN-EL4a battery

- Gigabit ethernet remote control and video download*

- Audio IO and internal microphone*

- HDMI video output*

- Two channel 1Msa/s waveform capture*

- Storage: SD card, two USB host ports (flash drives/hard drives), eSATA 3G

- Trigger: TTL, switch closure, image change*, sound*, accelerometer*

- Low-noise variable-speed fan - camera can run indefinitely without overheating
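The throughput and record-time figures in the list above can be cross-checked with integer arithmetic. The pixel-rate line uses only the listed numbers; the record-time part assumes frames are stored as packed 12-bit pixels (1.5 bytes per pixel) and that "8GB"/"16GB" mean GiB, both of which are assumptions rather than datasheet facts.

```shell
# Sanity-check the Chronos 1.4 headline numbers with integer arithmetic.
WIDTH=1280
HEIGHT=1024
FPS=1057

# Pixel throughput at full resolution: pixels per frame * frames per second.
PIXELS_PER_SEC=$(( WIDTH * HEIGHT * FPS ))
echo "Throughput: $PIXELS_PER_SEC pixels/s"

# Assumption: packed 12-bit pixels, i.e. 12/8 = 1.5 bytes per pixel.
BYTES_PER_FRAME=$(( WIDTH * HEIGHT * 12 / 8 ))

for ram_gib in 8 16; do
  ram_bytes=$(( ram_gib * 1024 * 1024 * 1024 ))   # assumption: GB here means GiB
  frames=$(( ram_bytes / BYTES_PER_FRAME ))
  centisec=$(( frames * 100 / FPS ))               # record time in hundredths of a second
  printf '%sGB: %s frames, about %d.%02d s at %s fps\n' \
    "$ram_gib" "$frames" "$(( centisec / 100 ))" "$(( centisec % 100 ))" "$FPS"
done
```

The pixel rate comes out to about 1.39 billion pixels per second, matching the 1.4Gpx/s claim. Under the 12-bit assumption the record times land at roughly 4.1 s and 8.3 s, in line with the listed 4s and 8s; a different storage bit depth would shift them.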

Monday, November 21, 2016

Sunday, November 20, 2016

Wednesday, October 26, 2016

Wednesday, August 31, 2016

Fwd: Low-cost, Low-power Neural Networks; Real-time Object Detection and Classification; More

From: Embedded Vision Insights from the Embedded Vision Alliance <newsletter@embeddedvisioninsights.com>

Date: Tue, Aug 30, 2016 at 7:36 AM

Subject: Low-cost, Low-power Neural Networks; Real-time Object Detection and Classification; More

VOL. 6, NO. 17, A NEWSLETTER FROM THE EMBEDDED VISION ALLIANCE, Late August 2016

FEATURED VIDEOS

- "Tailoring Convolutional Neural Networks for Low-Cost, Low-Power Implementation," a Presentation from Synopsys

- "An Augmented Navigation Platform: The Convergence of ADAS and Navigation," a Presentation from Harman

FEATURED ARTICLES

- A Design Approach for Real Time Classifiers

- Pokemon Go-es to Show the Power of AR

FEATURED NEWS

- Intel Announces Tools for RealSense Technology Development

- Auviz Systems Announces Video Content Analysis Platform for FPGAs

- Sighthound Joins the Embedded Vision Alliance

- Qualcomm Helps Make Your Mobile Devices Smarter with New Snapdragon Machine Learning Software Development Kit

- Basler Addressing the Industry's Hot Topics at VISION Stuttgart

UPCOMING INDUSTRY EVENTS

- ARC Processor Summit: September 13, 2016, Santa Clara, California

- Deep Learning for Vision Using CNNs and Caffe: A Hands-on Tutorial: September 22, 2016, Cambridge, Massachusetts

- IEEE International Conference on Image Processing (ICIP): September 25-28, 2016, Phoenix, Arizona

- SoftKinetic DepthSense Workshop: September 26-27, 2016, San Jose, California

- Sensors Midwest (use code EVA for a free Expo pass): September 27-28, 2016, Rosemont, Illinois

- Embedded Vision Summit: May 1-3, 2017, Santa Clara, California

Sunday, July 31, 2016

Thursday, July 21, 2016

Fwd: Jovision 3.0MP Starlight Test report

From: Lulu Wang <us@jovision.com>

Date: Thu, Jul 21, 2016 at 8:40 AM

Subject: Jovision 3.0MP Starlight Test report

We now have 3.0MP/4.0MP starlight IP cameras. Please see the following pictures.

First-class starlight R&D, done in-house.

Lulu Wang

Jovision Technology Co., Ltd

Address: 12th Floor, No. 3 Building, Aosheng Square, No. 1166 Xinluo Street, Jinan, China ZIP: 250101

Website: http://en.jovision.com Tel: 0086-0531-55691778-8668

E-mail: us@jovision.com Skype: lulu-jovision

Tuesday, July 19, 2016

Wednesday, June 15, 2016

Thursday, June 09, 2016

Saturday, June 04, 2016

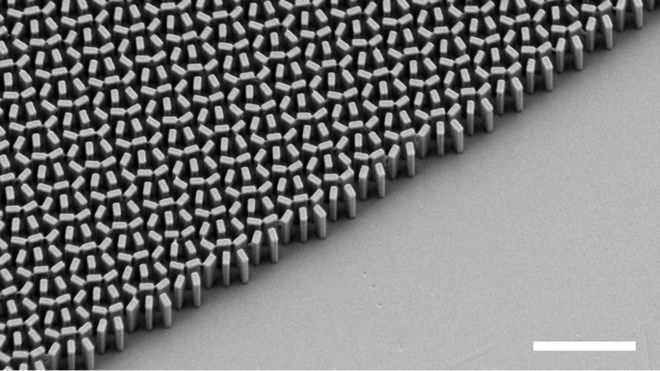

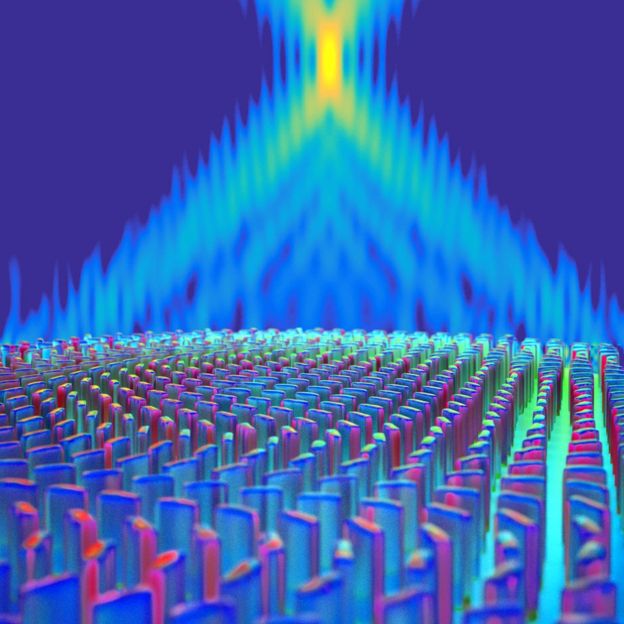

Flat lens promises possible revolution in optics

Image credits: Federico Capasso; Peter Allen/Harvard

Wednesday, May 25, 2016

Behringer U-Control UCA202 | Sweetwater.com

Portable USB Interface

- 2 In/2 Out USB Audio Interface

- Mac or PC, no drivers required

- 16-bit/48kHz converters

- Low latency

- Headphone output

- Optical out

- USB powered

- Free DAW download

Tech Specs

| Spec | Value |
|---|---|
| Computer Connectivity | USB 2.0 |
| Form Factor | Desktop |
| Simultaneous I/O | 2 x 2 |
| A/D Resolution | 16-bit/48kHz |
| Analog Inputs | 2 x RCA |
| Analog Outputs | 2 x RCA |
| Digital Outputs | 1 x Optical |
| Bus Powered | Yes |
| Depth | 0.87" |
| Width | 3.46" |
| Height | 2.36" |
| Weight | 0.26 lbs. |
| Manufacturer Part Number | UCA202 |
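The 16-bit/48kHz figures translate directly into a data rate and into the latency contributed by each audio buffer. This sketch assumes plain uncompressed stereo PCM; the buffer sizes are arbitrary examples, since real latency depends on the driver and host software settings rather than on the interface alone.

```shell
# Uncompressed PCM data rate: channels * bits per sample * sample rate.
CHANNELS=2
BITS=16
RATE=48000   # Hz, the UCA202's listed converter rate

echo "Stream: $(( CHANNELS * BITS * RATE )) bit/s per direction"

# Latency contributed by one buffer of N frames is N / RATE seconds.
# These buffer sizes are example values, not UCA202 settings.
for frames in 128 256 512; do
  echo "${frames}-frame buffer: $(( frames * 1000000 / RATE )) us"
done
```

At about 1.5 Mbit/s each way, the stereo stream uses only a small fraction of even USB full-speed bandwidth (12 Mbit/s).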

Monday, April 25, 2016

Sunday, April 24, 2016

Wednesday, April 20, 2016

Tuesday, April 19, 2016

Tuesday, April 12, 2016

Wednesday, April 06, 2016

Fwd: Got what it takes to win an Auggie?

---------- Forwarded message ----------

From: Augmented World Expo <info@augmentedreality.org>

Date: Wednesday, April 6, 2016

Subject: Got what it takes to win an Auggie?

To: sokol@videotechnology.com
