Sunday, November 30, 2014

Saturday, November 29, 2014

Friday, November 28, 2014

Infrared Thermal Imaging Hack-a-thon

FLIR ONE Hack-a-thon:

Infrared thermal Imaging Camera for your Smartphone

At the HackerDojo

Start: Friday, December 12, 2014 at 1:00 p.m.

End: Sunday, December 14, 2014 at 9:00 a.m.

Contact:

Carlos.Uranga@Hackerdojo.Com; Interim Assistance: Anil.Reddy@Hackerdojo.Com, Jaun.Alvarez@Hackerdojo.Com

URL: http://goo.gl/Q4ixKX

Fee: Free for first 50 participants, $15 thereafter per person. Click on the link above to reserve your ticket now!

Details:

Join FLIR and HackerDojo in a 38-hour Hack-a-thon to develop cool and interesting iOS apps for the FLIR ONE!

Cash, products, and promotional prizes for best apps in four categories.

Event Agenda

Friday 12/12

4:00 p.m. - 6:00 p.m. - FLIR ONE Hack-A-Thon Event Kickoff and Keynotes

6:00 p.m. - 12:00 a.m. - FLIR ONE Hack-A-Thon with Hourly Prize Drawings*

*Must be present to win

Saturday 12/13

All day - FLIR ONE Hack-A-Thon Continues with Hourly Prize Drawings

Sunday 12/14

12:00 a.m. - 8:00 a.m. - FLIR ONE Hack-A-Thon Continues

8:00 a.m. - 12:00 p.m. - Developer Presentations and Demonstrations

12:00 p.m. - 1:00 p.m. - Catered Lunch and Judging

1:00 p.m. - Award Ceremony

Best New App Prizes

1st - $5000 + FLIR ONEs for the entire team + FLIR FX

2nd - $2000 + FLIR ONEs for the entire team + FLIR FX

3rd - $1000 + FLIR ONEs for the entire team

4th - $500 + FLIR ONEs for the entire team

5th - FLIR ONEs for the entire team

Additional prizes: Best app in each category - $1000 + FLIR ONEs

App categories: Work, Home, Play, Games/Entertainment


Notes:

Ideally teams of 1-4, hacking the future!

Check back weekly for further updates.


Thursday, November 27, 2014

Wednesday, November 26, 2014

Police use of body cameras cuts violence and complaints

http://www.theguardian.com/world/2013/nov/04/california-police-body-cameras-cuts-violence-complaints-rialto

"I think we've opened some eyes in the law enforcement world. We've shown the potential," said Tony Farrar, Rialto's police chief. "It's catching on."

Body-worn cameras are not new. Devon and Cornwall police launched a pilot scheme in 2006 and forces in Strathclyde, Hampshire and the Isle of Wight, among others, have also experimented.

But Rialto's randomised controlled study has seized attention because it offers scientific – and encouraging – findings: after cameras were introduced in February 2012, public complaints against officers plunged 88% compared with the previous 12 months. Officers' use of force fell by 60%.

"When you know you're being watched you behave a little better. That's just human nature," said Farrar. "As an officer you act a bit more professional, follow the rules a bit better."

Labels:

LAW,

police,

Sousveillance,

surveillance

Cops Deleted Video, But it Survived on the Cloud

http://thefreethoughtproject.com/cops-beat-man-7-month-pregnant-wife-deleted-video-survived-cloud/

Denver, CO: The Denver police department has been accused of using excessive force after a video, which officers allegedly deleted, survived in the cloud and was turned over to FOX 31.

After the police-on-pregnant-woman violence subsided, Frasier says that’s when the Denver police officers became interested in his Samsung tablet.

Frasier told FOX 31 that officers on scene threatened him with arrest, demanded he turn over all photos and videotape to them, and then seized his tablet over his objections.

“When he took it, I said, ‘Hey! You can’t do that. You need a warrant for that!’ and he said, ‘What program did you take the video with? Where is that?’” Frasier said.

He said police ignored his objections and dug through his personal photos without obtaining a court order.

“The first officer that comes up to ask me about my witness statement brings me to the police car and says we could do this the easy way or we could do this the hard way,” Frasier said. “It was taken as ‘You can either cooperate and give us what we want or we’re going to incarcerate you.’”

According to Frasier, when he got his tablet back, the video was gone. “I couldn’t believe it. My heart dropped. I know I just shot that video, like it’s not on there now?” Frasier said.

Frasier said it’s “possible” both he and the police officer who looked through his tablet “missed seeing” the clip inside his files.

However, Frasier said he suspects that, in reality, the clip was deleted either intentionally or by mistake.

When he got back home that evening, Frasier synced his tablet with his cloud storage, and within a few moments the video reappeared.

“It was very well known that the video was shot and things were done on the video that shouldn’t be leaked out, that it would be bad for the reputations of the police officers,” Frasier said.

Despite his friends telling him to delete the video for fear that the officers would seek revenge, Frasier did the courageous thing and submitted it to FOX 31, and for this Frasier deserves credit.

Monday, November 24, 2014

Sony Promotes 4K Resolution for Security Applications

Sony publishes a YouTube video showing 4K technology for security cameras:

Another Sony video demos the 5-axis in-body image stabilization in the Alpha 7 II mirrorless camera, said to be the first such system in a full-frame camera.

Sunday, November 23, 2014

Thursday, November 20, 2014

Tuesday, November 18, 2014

Fwd: video book with 3 sensors

---------- Forwarded message ----------

From: Masrui video brochure

Subject: video book with 3 sensors


5-inch video book; each page has a video. See how it works: https://www.youtube.com/watch?v=xIP11P1gJ0s

Monday, November 17, 2014

Sunday, November 16, 2014

The Motley Fool predicts the end of TV.

THE $2.2 TRILLION WAR FOR YOUR LIVING ROOM BEGINS NOW

And the big winners will surprise you. One simple test (explained in this video) shows you how to bag these 3 killer stocks & maximize your profit potential.

http://www.fool.com/video-alert/stock-advisor/sa-cabletv-ext/

Thursday, November 13, 2014

Wednesday, November 12, 2014

Mozilla launches MozVR, a virtual reality web site.

Mozilla Research VR Team just launched MozVR.com to foster VR-native sites. The page lets Oculus Rift owners browse technology demos (in a Rift-friendly interface, naturally) that show off what VR can do on the web.

Mozilla is sharing the code, tools and tutorials for its own front end.

http://mozvr.com/

http://mozvr.com/content/2014/11/10/mozvr-launches.html

Labels:

3D,

Mozilla,

Oculus VR,

virtual reality

Tuesday, November 11, 2014

The Work of Dr. Harold Edgerton as presented by Dr. Kim Vandiver

This may seem somewhat off topic, but if you love photography and history, this is about the early days of electronic flash photography.

Edgerton's work in high-speed (stop-action) photography advanced many areas of science and technology.

Temporal Aliasing of Guitar Strings

This neat little trick is possible because of the rolling shutter of the phone's camera. Note that the 'waves' seen are temporal; the strings are actually vibrating up and down while remaining horizontal.

Read this blog post for an excellent explanation: http://danielwalsh.tumblr.com/post/54...
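The rolling-shutter illusion can be reproduced with a small simulation. This is a minimal sketch under stated assumptions: the sensor reads out one line at a time along the length of the string, line k is captured at time k × line_time, and (as the post puts it) the string moves up and down as a whole. The 30 µs line time is a hypothetical figure, not a measured value for any particular phone; 82.4 Hz is the fundamental of a guitar's open low E string.

```python
import math


def rolling_shutter_trace(num_lines, line_time_s, freq_hz, amplitude=1.0):
    """String displacement recorded by each sensor line.

    Assumes line k is exposed at t = k * line_time_s and that the whole
    string moves together: y(t) = A * sin(2 * pi * f * t).
    """
    return [amplitude * math.sin(2 * math.pi * freq_hz * k * line_time_s)
            for k in range(num_lines)]


def apparent_wavelength_lines(line_time_s, freq_hz):
    """Sensor lines spanned by one full period of the fake 'wave'."""
    return 1.0 / (freq_hz * line_time_s)


if __name__ == "__main__":
    line_time = 30e-6   # hypothetical readout time per sensor line
    f_low_e = 82.4      # open low E string fundamental, Hz
    trace = rolling_shutter_trace(1080, line_time, f_low_e)
    print(f"{apparent_wavelength_lines(line_time, f_low_e):.1f} lines per wave")
```

A shorter line time (faster readout) stretches the apparent wave over more lines, while a higher-pitched string squeezes it, which is why different strings show differently spaced "waves" in the same shot.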


Sunday, November 09, 2014

Thursday, November 06, 2014

Optical super reflector: at a specific wavelength, light is perfectly reflected

http://inhabitat.com/mit-creates-perfect-mirror-with-zero-distortion-signaling-breakthrough-for-solar-power/

MIT Creates World's First 'Perfect Mirror' with Zero Distortion, Signaling Breakthrough for Solar Power

Scientists at MIT just announced that they have created the perfect mirror – and it could signal a breakthrough for solar power technology. The team’s “perfect mirror” is capable of reflecting any type of wave — light, sound, or water — with absolutely zero distortion, so it could provide a huge boost to concentrated solar power installations, which use mirrors to focus concentrated beams of sunlight onto a specific area.

Ever feel like what you see in the mirror can’t possibly be accurate? Well, there’s actually some truth to that. All mirrors absorb some of the light waves that hit them or scatter photons around in different directions, resulting in slight distortion. When it comes to solar power installations, these tiny distortions can add up to big efficiency losses.
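How "tiny" losses add up is plain geometric decay: if each reflection keeps a fraction R of the light, n reflections keep R to the power n. The sketch below assumes the same reflectivity at every bounce; the R values are hypothetical round numbers, not measurements of any real mirror, and R = 1.0 stands in for the idealized case the MIT result approaches at one wavelength and angle.

```python
def surviving_fraction(reflectivity, bounces):
    """Fraction of incoming light left after `bounces` reflections.

    Assumes every reflection keeps the same fraction `reflectivity`
    (the rest is absorbed or scattered), so losses compound as R ** n.
    """
    if not 0.0 <= reflectivity <= 1.0:
        raise ValueError("reflectivity must be between 0 and 1")
    if bounces < 0:
        raise ValueError("bounces must be non-negative")
    return reflectivity ** bounces


if __name__ == "__main__":
    for r in (0.90, 0.95, 0.99, 1.0):  # hypothetical per-bounce reflectivities
        row = [round(surviving_fraction(r, n), 3) for n in (1, 2, 5, 10)]
        print(f"R = {r:.2f}: {row}")
```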

Marin Soljačić and colleagues from MIT’s photonics and electromagnetics group didn’t set out to create a perfect mirror that could eliminate these inefficiencies – as in many scientific discoveries, they stumbled upon it while investigating something else. ExtremeTech explains:

The team was studying the behavior of a photonic crystal — in this case, a silicon wafer with a nanopatterned layer of silicon nitride on top — that had had holes drilled into it, forming a lattice. These holes are so small that they can only accommodate a single light wave. At most angles, light was partially absorbed by the photonic crystal, as they expected — but with a specific wavelength of red light, at an angle of 35 degrees, the light was perfectly reflected. Every photon that was emitted by the red light source was perfectly bounced back, at exactly the right angle, with no absorption or scattering.

This work is “very significant, because it represents a new kind of mirror which, in principle, has perfect reflectivity,” says A. Douglas Stone, in a press release. Stone is a professor of physics at Yale University who was not involved in this research. The finding, he says, “is surprising because it was believed that photonic crystal surfaces still obeyed the usual laws of refraction and reflection,” but in this case they do not.

The researchers are still trying to figure out why this deviation from known scientific laws took place. However, there is some excitement about what a perfect mirror could mean for various industries. The most obvious application is more powerful and efficient lasers, but concentrated solar power and fiber optics could also be improved.

Roughly $300 for stereo video on the Pi.

Pi with dual camera inputs: $215

Camera boards: $30 each, so two of them bring the total to $215 + 2 x $30 = $275.

http://www.adafruit.com/products/1367

I think this price is driven mostly by demand from OpenCV users and other hobbyists, which keeps it too high to use inside all but high-ticket products.

Labels:

3D Camera,

image processing,

Raspberry Pi

Magic Leap raises $542 million for Augmented Reality (AR).

http://www.theverge.com/2014/10/21/7026889/magic-leap-google-leads-542-million-investment-in-augmented-reality-startup

http://www.magicleap.com/

Google is leading a huge $542 million round of funding for the secretive startup Magic Leap, which is said to be working on augmented reality glasses that can create digital objects that appear to exist in the world around you. Though little is known about what Magic Leap is working on, Google is placing a big bet on it: in addition to the funding, Android and Chrome leader Sundar Pichai will join Magic Leap's board, as will Google's corporate development vice-president Don Harrison. The funding is also coming directly from Google itself, not from an investment arm like Google Ventures, all suggesting this is a strategic move to align the two companies and eventually partner when the tech is more mature down the road.

Magic Leap's technology currently takes the shape of something like a pair of glasses, according to The Wall Street Journal. Rather than displaying images on the glasses or projecting them out into the world, Magic Leap's glasses reportedly project their image right onto their wearer's eyes — and apparently to some stunning effects.

"It was incredibly natural and almost jarring — you’re in the room, and there’s a dragon flying around, it’s jaw-dropping and I couldn’t get the smile off of my face," Thomas Tull, CEO of Legendary Pictures, tells the Journal. Legendary also took part in this round of investment, alongside Qualcomm, Kleiner Perkins, Andreessen Horowitz, and Obvious Ventures, among others. Qualcomm's executive chairman, Paul Jacobs, is also joining Magic Leap's board.

The eclectic mix of companies participating in this investment round speaks to how broadly Magic Leap sees its potential. Its founder says that he wants the company to become "a creative hub for gamers, game designers, writers, coders, musicians, filmmakers, and artists." Legendary, which makes films including Godzilla and The Dark Knight, is interested in its potential for movies. Google likely sees far more ways to put it to use.

The technology sounds like it could be an obvious companion to Google Glass, but for now the Journal reports that they're not being integrated. Magic Leap declined to comment on what might happen down the road. Nonetheless, the investment in Magic Leap appears to be Google betting on augmented reality as the future of computing, pitting it in a fight against virtual reality competitors. Eventually, it'll likely be facing off against Facebook's Oculus Rift, the biggest name in VR right now and one that Facebook was willing to pay $2 billion for.

Magic Leap also says that it may "positively transform the process of education."

Magic Leap was founded by, and is run by, Rony Abovitz, who previously founded the medical robotics company Mako Surgical, which was sold for $1.65 billion last year. The Journal reports that Abovitz has a biomedical engineering degree from the University of Miami. He previously gave a bizarre, psychedelic TEDx talk involving 2001, green and purple apes, and a punk band. His new company, which has been around since 2011, is headquartered in Florida, so it isn't exactly the typical tech startup out of Silicon Valley. Abovitz says the location allows Magic Leap to recruit globally. It currently has over 100 employees.

Though Magic Leap's product sounds like a pair of augmented reality glasses, Abovitz and his company dislike the term. Magic Leap brands its effect as "Cinematic Reality," which sounds a bit cooler but doesn't really mean anything just yet. "Those are old terms – virtual reality, augmented reality. They have legacy behind them," Abovitz told the South Florida Business Journal back in February, after closing an initial round of funding. "They are associated with things that didn’t necessarily deliver on a promise or live up to expectations. We have the term cinematic reality because we are disassociated with those things. … When you see this, you will see that this is computing for the next 30 or 40 years. To go farther and deeper than we’re going, you would be changing what it means to be human."

This is all something that Google is eager to view the results of. "We are looking forward to Magic Leap's next stage of growth, and to seeing how it will shape the future of visual computing," Pichai says in a statement. What exactly Google will do with augmented reality is still unknown, but, much like how Google has managed to control a great deal of mobile computing through Android, it's been looking ahead to ensure that it doesn't miss out on the next leap either. It declined to provide further comment on the investment.

Talking to TechCrunch, Abovitz says that Magic Leap should be launching a product for consumers "relatively soon." There's no stated target date for now, though, and it sounds like it still has some development to do.

Labels:

Augmented Reality,

google,

Magic Leap

Stereo Vision and Depth Mapping with Two Raspi Camera Modules

http://hackaday.com/2014/11/03/stereo-vision-and-depth-mapping-with-two-raspi-camera-modules/

The Raspberry Pi has a port for a camera connector, allowing it to capture 1080p video and stream it to a network without having to deal with the craziness of webcams and the improbability of capturing 1080p video over USB. The Raspberry Pi compute module is a little more advanced; it breaks out two camera connectors, theoretically giving the Raspberry Pi stereo vision and depth mapping. [David Barker] put a compute module and two cameras together, making this build a reality.

The use of stereo vision for computer vision and robotics research has been around much longer than other methods of depth mapping like a repurposed Kinect, but so far the hardware to do this has been a little hard to come by. You need two cameras, obviously, but the software techniques are well understood in the relevant literature.
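The "well understood" core of stereo depth mapping is triangulation: for two rectified pinhole cameras, a point's depth is Z = f·B/d, where f is the focal length in pixels, B the baseline between the cameras, and d the disparity (horizontal pixel shift) between the two views. The sketch below is a generic textbook formula, not code from [David]'s build; the focal length and 6 cm baseline are hypothetical values.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Distance (m) to a point seen by a rectified stereo camera pair.

    Pinhole-camera triangulation: Z = f * B / d, with f the focal length
    in pixels, B the baseline between camera centres in metres, and d the
    horizontal shift (disparity) of the point between the two images.
    """
    if disparity_px < 0:
        raise ValueError("disparity must be non-negative for a rectified pair")
    if disparity_px == 0:
        return float("inf")  # no parallax: the point is effectively at infinity
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    F_PX = 1000.0    # hypothetical focal length, in pixels
    BASELINE = 0.06  # hypothetical 6 cm between the two camera modules
    for d in (60, 20, 5, 1):
        print(d, "px ->", round(depth_from_disparity(d, F_PX, BASELINE), 2), "m")
```

Because depth is inversely proportional to disparity, a one-pixel error matters little for nearby objects but a lot for distant ones, which is why a wider baseline helps at range.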

[David] connected two cameras to a Pi compute module and implemented three different versions of the software techniques: one in Python and NumPy, running on a 3GHz x86 box; a version in C, running on x86 and the Pi’s ARM core; and another in assembler for the VideoCore on the Pi. Assembly is the way to go here: on the x86 platform, Python could do the parallax computations in 63 seconds, and C could manage it in 56 milliseconds. On the Pi, C took 1 second, and the VideoCore took 90 milliseconds. This translates to a frame rate of about 12FPS on the Pi, more than enough for some very, very interesting robotics work.
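The "parallax computations" timed above are typically some form of block matching. Since the post could not locate [David]'s software, the following is only a generic sketch of the technique, not his implementation: for each pixel in the left image, it tries every disparity up to `max_disp` and keeps the one whose window in the right image has the smallest sum of absolute differences (SAD). It is written in pure Python for readability; the function name and the synthetic test images are hypothetical, and this nested-loop form is exactly the kind of code that benefits from being moved to C or to the VideoCore.

```python
import random


def sad_disparity(left, right, max_disp, half_win=1):
    """Brute-force SAD block matching on two rectified grayscale images.

    left, right: equally sized lists of rows of ints. For each interior
    pixel of the left image, test shifts d = 0..max_disp and keep the d
    whose window in the right image (shifted left by d) best matches.
    Border pixels where the window does not fit are left at 0.
    """
    h, w = len(left), len(left[0])
    disp = [[0] * w for _ in range(h)]
    for y in range(half_win, h - half_win):
        for x in range(half_win, w - half_win):
            best_d, best_cost = 0, None
            for d in range(max_disp + 1):
                if x - d - half_win < 0:
                    break  # the shifted window would fall off the right image
                cost = 0
                for dy in range(-half_win, half_win + 1):
                    for dx in range(-half_win, half_win + 1):
                        cost += abs(left[y + dy][x + dx] - right[y + dy][x + dx - d])
                if best_cost is None or cost < best_cost:
                    best_d, best_cost = d, cost
            disp[y][x] = best_d
    return disp


if __name__ == "__main__":
    random.seed(0)
    W, H, SHIFT = 24, 8, 3
    right = [[random.randint(0, 255) for _ in range(W)] for _ in range(H)]
    # The left camera sees the same texture SHIFT pixels further to the right.
    left = [[right[y][max(x - SHIFT, 0)] for x in range(W)] for y in range(H)]
    disp = sad_disparity(left, right, max_disp=6)
    print(disp[4][4:W - 1])  # interior pixels should report SHIFT
```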

There are some better pictures of what this setup can do over on the Raspi blog. We couldn’t find a link to the software that made this possible, so if anyone has a link, drop it in the comments.


Labels:

3D Camera,

autostereoscopic,

depth camera,

Raspberry Pi

Lytro Hits Up The Enterprise With The Introduction Of The Lytro Platform And Dev Kit

https://www.lytro.com/files/LDK_Data_Sheet.pdf

https://www.lytro.com/platform/

From: http://techcrunch.com/2014/11/06/lytro-pivots-to-hit-up-the-enterprise-with-the-introduction-of-the-lytro-platform-and-dev-kit/

Lytro has been working for three years to build a brand new type of camera with light field technology, and while the tech itself is quite incredible, transforming that into a viable business has proven difficult.

Until now, the company has been selling special cameras, the original Lytro and the newer, photographer-friendly Illum. It’s a difficult business that is in flux, given that so many entry-level photographers now have a camera in their smartphone and more intensive photographers want a proper DSLR.

And so, Lytro is adding a new revenue stream to its business with the launch of the Lytro Development Kit. For now, it’s a software development kit that comes with an API for integrating light field technology into applications in a number of imaging fields, including holography, microscopy, architecture, and security.

Lytro’s light field sensor takes into account the direction in which light is traveling, rather than capturing light on a single plane. This, paired with Lytro’s software, allows images to be refocused after the shot, among other dimension-based features. With the LDK, Lytro is looking to open up that functionality to other fields and businesses, with a revenue stream coming from the enterprise side.
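Post-shot refocusing from a light field can be illustrated with the classic shift-and-add method: treat the capture as a grid of sub-aperture views, shift each view in proportion to its position in the aperture, and average them. Objects whose parallax matches the chosen shift line up and come out sharp; everything else blurs. This is a minimal sketch of that general technique under stated assumptions (integer shifts, a 3x3 grid of views, a single synthetic bright point); it is not Lytro's processing engine or the LDK API, and `point_views` is a hypothetical helper.

```python
def refocus(views, shift_per_step):
    """Synthetic refocus by shift-and-add.

    views: dict mapping integer aperture offsets (u, v), centred on 0, to
    equally sized 2D lists of pixel values. Each view is sampled with an
    offset of (shift_per_step * u, shift_per_step * v) pixels, then all
    views are averaged.
    """
    first = next(iter(views.values()))
    h, w = len(first), len(first[0])
    out = [[0.0] * w for _ in range(h)]
    for (u, v), img in views.items():
        dx, dy = shift_per_step * u, shift_per_step * v
        for y in range(h):
            sy = min(max(y + dy, 0), h - 1)  # clamp at the image border
            for x in range(w):
                sx = min(max(x + dx, 0), w - 1)
                out[y][x] += img[sy][sx]
    n = len(views)
    return [[val / n for val in row] for row in out]


def point_views(size=9, parallax=2):
    """Hypothetical 3x3 light field of one bright point moving `parallax` px per view."""
    c = size // 2
    views = {}
    for u in (-1, 0, 1):
        for v in (-1, 0, 1):
            img = [[0.0] * size for _ in range(size)]
            img[c + parallax * v][c + parallax * u] = 1.0
            views[(u, v)] = img
    return views


if __name__ == "__main__":
    lf = point_views()
    print(refocus(lf, 2)[4][4])  # 1.0: shift matches parallax, point in focus
    print(refocus(lf, 0)[4][4])  # ~0.111: point smeared over nine spots
```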

Alongside access to the API and the Lytro processing engine, the company will also be working alongside partners to develop custom devices and photography hardware to accomplish their specific, industry-based goals.

With the launch, Lytro has announced four major partnerships with organizations already on the platform, including NASA’s Jet Propulsion Laboratory, a medical devices startup called General Sensing, the Army Night Vision and Electronic Sensors Directorate, and an unnamed “industrial partner” applying the tech to work with nuclear reactors.

Pricing starts at $20,000 for access to the platform. You can learn more at https://www.lytro.com/platform/.

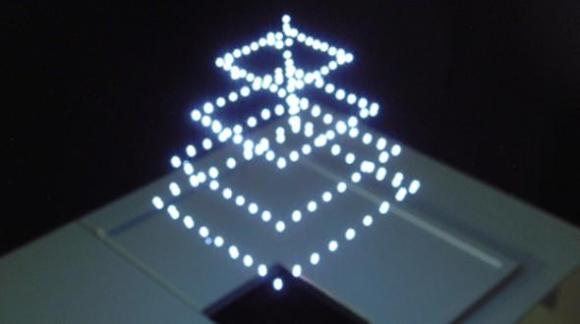

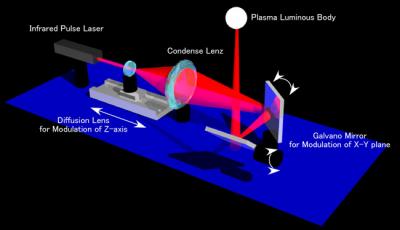

How a Real 3D Display Works

From: http://hackaday.com/2014/11/06/how-a-real-3d-display-works/

There’s a new display technique that’s making the blog rounds, and like anything that seems like it’s torn from [George Lucas]’ cutting room floor, it’s getting a lot of attention. It’s a device that can display voxels in midair, forming low-resolution three-dimensional patterns without any screen, any fog machine, or any reflective medium. It’s really the closest thing to the projectors in a holodeck we’ve seen yet, leading a few people to ask how it’s done.

This isn’t the first time we’ve seen something like this. A few years ago, a similar 3D display technology was demonstrated that used a green laser to display tens of thousands of voxels in a display medium. The same company used this technology to draw white voxels in air, without a smoke machine or anything else for the laser beam to reflect off of. We couldn’t grasp how this worked at the time, but with a little bit of research we can find the relevant documentation.

A system like this was first published in 2006, building on earlier work that only displayed pixels on a 2D plane. The device worked by focusing the beam of an infrared Nd:YAG laser to an extremely small point. At that point, the air heats up enough to turn into plasma, creating a bright, if temporary, point of light. With the laser pulsing several hundred times a second, a picture can be built up from these small plasma bursts.

Moving a ball of plasma around in 2D space is rather easy; all you need are a few mirrors. To add the third dimension to projected images, a lens mounted on a linear rail moves back and forth, changing where the optics bring the beam to a focus. It’s an extremely impressive optical setup, but simple enough to get the gist of.
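Why a lens sliding on a rail moves the voxel in depth can be sketched with the thin-lens equation, 1/f = 1/s_o + 1/s_i. The post gives no details of the real optics, so everything below is an idealized assumption: a single thin lens of hypothetical 100 mm focal length, light diverging from a fixed point at distance s_o behind it, and the plasma forming where the beam refocuses at distance s_i in front of it. Small movements of the lens produce large swings in the focus position.

```python
def image_distance(focal_length_m, object_distance_m):
    """Solve the thin-lens equation 1/f = 1/s_o + 1/s_i for s_i (metres).

    A negative result (s_o < f) means a virtual image: the beam never
    comes to a focus in front of the lens.
    """
    if object_distance_m == focal_length_m:
        return float("inf")  # light leaves collimated: no focus point
    return 1.0 / (1.0 / focal_length_m - 1.0 / object_distance_m)


if __name__ == "__main__":
    f = 0.10  # hypothetical 100 mm lens on the rail
    # s_o: distance from a fixed, diverging point source to the moving lens
    for s_o in (0.11, 0.12, 0.15):
        s_i = image_distance(f, s_o)
        # position of the focus (and the plasma voxel), measured from the source
        print(f"lens at {s_o:.2f} m -> focus {s_o + s_i:.2f} m from source")
```

In this toy setup, sliding the lens only 4 cm (from 0.11 m to 0.15 m) pulls the focus from about 1.21 m to about 0.45 m from the source, which suggests why a short linear rail can sweep a usable depth range.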

Having a device that projects images with balls of plasma leads to another question: how safe is this thing? There’s no mention of how powerful the laser used in this device is, but in every picture of this projector, people are wearing goggles. In the videos, there is something that is obviously missing once you notice it: sound. This projector is creating tiny balls of expanding air hundreds of times per second. We don’t know what it sounds like, or if you can hear it at all, but a constant buzz would limit its application as an advertising medium.

As with any state-of-the-art project where we kinda know how it works, there’s a good chance someone with experience in optics could put something like this together. A normal green laser pointer in a water medium would be much safer than an IR YAG laser, but other than that the door is wide open for a replication of this project.

Labels:

3D Display,

Hologram,

Holographic

Omnivision Automotive Image Sensor Demo

LowLightVideos publishes a video demo of the Omnivision OV10640, an OmniBSI sensor with 4.2 µm pixels designed for automotive applications. The notable points in the video:

0:10 Driving down the road there are clouds in the night sky that can be seen, yet oncoming headlights are not blinding.

0:18 Driving underground during the day provides a fairly good image, though the trees outdoors are a bit washed out.

0:55 Driving into the sun does not oversaturate the sensor. Next comes a turn into a dark underground section, which quickly and smoothly brightens up as we enter.

1:40 All those headlights create a tiny bit of glare, but the clouds look pretty good in the night sky. Very little noise is visible.

4:00 At night the streetlights never get too bright and the glare is never too great, yet the sky has visible clouds and very little noise.

Labels:

camera,

HDR,

OmniVision,

sensor

Peter Thiel Invests in PixelPlus

Korea Economic Daily reports that Peter Thiel, one of the legendary Silicon Valley investors and a former PayPal CEO, has acquired a 3% stake in Korean image sensor maker PixelPlus. Reportedly, he paid 10 billion won (about $9.3M), putting the company valuation at about 330 billion won, or $310M. Analysts expect the company value to jump to 500 billion won next year, when it is expected to hold its IPO on KOSDAQ. Pixelplus founder and president Lee Seo-gyu is said to own a 24.4% stake in the company.

Pixelplus posted 149.4 billion won in sales revenue and 46.5 billion won in operating profit last year.
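The reported valuation follows directly from the deal terms: dividing the price paid by the fraction of the company bought gives the value implied for the whole company. This quick check is a simplification that assumes every share is worth the same as the ones Thiel bought; it ignores any premium or discount on the stake.

```python
def implied_valuation(price_paid, stake_fraction):
    """Whole-company value implied by paying `price_paid` for `stake_fraction`."""
    if not 0.0 < stake_fraction <= 1.0:
        raise ValueError("stake_fraction must be in (0, 1]")
    return price_paid / stake_fraction


if __name__ == "__main__":
    won = implied_valuation(10e9, 0.03)   # 10 billion won for 3%
    usd = implied_valuation(9.3e6, 0.03)  # about $9.3M for 3%
    print(f"{won / 1e9:.0f} billion won, ${usd / 1e6:.0f}M")
```

The result, roughly 333 billion won or $310M, agrees with the "about 330 billion won, or $310M" figure in the report.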

This Chinese Company Wants to Be the Next Oculus Rift

http://gadgets.ndtv.com/laptops/news/this-chinese-company-wants-to-be-the-next-oculus-rift-528008

As a part of our weekly series on Kickstarter and other crowdfunding websites, we try and find the most exciting new projects online. The Oculus Rift and the Pebble Smartwatch proved that the most interesting and innovative new products don't have to emerge from giant companies anymore, and we're watching out for the next big thing.

A Chinese company called ANTVR believes that will be its ANTVR Kit, a virtual reality kit that the company says is even better than the Oculus Rift. What's more, ANTVR claims that, unlike the Rift, its headset is compatible with different gaming platforms, not just the PC. To bring the ANTVR Kit to production, the company has taken to Kickstarter.

Normally, when a company makes claims like that, you should start to feel skeptical, but the Kickstarter video includes testimony from people like Jan Goetgeluk, CEO at Virtuix, whose Omni treadmill is designed to work with the Oculus Rift. ANTVR also quotes David Helgason, the CEO and founder of Unity Technologies, as saying that "it's a really really great experience."

With some experts having tried out prototypes, this Kickstarter starts to sound like something which is real and exciting. At the time of writing, ANTVR has raised $171,991 out of a total target of $200,000, with 33 days left to go.

The headset looks similar to the Oculus Rift, but we were initially skeptical about the idea that it could be used across platforms. However, the company claims it can do that by using the headset as just a head-mounted display, and not for VR. They note on the Kickstarter page that if the game is only 2D, then you can wear the headset to play it as a 2D game on a large virtual screen. This means you can hook it up to consoles that have not been developed with its use in mind. Sony already has a somewhat similar product on the market, the Sony HMZ-T3Q.

But what's most interesting about the ANTVR might be the controller peripheral that the company has developed. It is quite unusual: while it's shaped like a gun for use in shooter games, you can unfold it to reveal a full controller or a steering wheel. Since the HDMI cable to your computer will run from the front of this controller, though, it might lead to some risky wire tangles, as the wire would be waved about as you play. There is a $200 wireless accessory available, though given how big a problem latency is already supposed to be, that sounds like a really bad idea.

The project is also pricing things similarly to the Oculus Rift Kickstarter, with a headset and controller coming for a $300 pledge. Until the commercial release of the Oculus Rift or Sony's Project Morpheus, this seems to be the standard benchmark for VR devices, though that will probably change once those other devices are widely available.


Labels:

ANTVR,

Oculus VR,

virtual reality

Oculus VR Headset's Consumer Version Is 'Very Close': CEO

http://gadgets.ndtv.com/wearables/news/oculus-vr-headsets-consumer-version-is-very-close-ceo-616607

Oculus VR could launch the consumer version of the Oculus Rift soon, as it is "months, not years away," according to CEO Brendan Iribe.

Speaking at Web Summit 2014 in Dublin, Iribe said (via The Next Web), "We're all hungry for it to happen. We're getting very close. It's months, not years away, but many months."

According to Iribe, the company has grown since its acquisition by Facebook in March; with a separate R&D division now in place, the number of employees has increased from 75 to over 200.

Iribe also said that the company's latest prototype, Crescent Bay, which was announced in September, is "largely finalized for a consumer product." Crescent Bay is an upgraded prototype of the Oculus Rift virtual reality headset that has higher resolution and built-in audio.

While the exact timing of the launch of the consumer version of the Rift virtual reality headset was not shared by Iribe, one thing we know from previous coverage is its approximate price.

In September, Oculus VR founder Palmer Luckey said in an interview that the price of the consumer version of the Rift could fall between $200 and $400, depending on a number of factors.

"We want to stay in that $200-$400 price range, [...] That could slide in either direction depending on scale, pre-orders, the components we end up using, business negotiations," Luckey said in an interview with Eurogamer.
