The Smallest VR Headset - About Half the Size and Weight of Traditional Devices - Offers Cinema-like Image Quality

Kopin and Goertek are unveiling their groundbreaking VR headset reference design, codenamed Elf VR, at AWE. The new design will eliminate the barriers that have long stood in the way of delivering an effective VR experience, overcoming uncomfortably bulky and heavy headset designs, low resolution, sluggish frame rates, and the annoying screen-door effect. The new Elf reference design features Kopin’s “Lightning™” OLED microdisplay panel, offering an incredible 2048 x 2048 resolution in each eye, more than three times the resolution of Oculus Rift or HTC Vive, at an unbelievable pixel density of 2,940 pixels per inch, five times that of standard displays.

In addition, the panel runs at a 120 Hz refresh rate, 33% faster than what traditional HMDs offer, for reduced motion blur, latency, and flicker. As a result, nausea and fatigue are eliminated. Because Kopin’s panel is OLED-based and has integrated driver circuits, it requires much less power, which extends battery life and substantially reduces heat output.

FOR IMMEDIATE RELEASE

KOPIN AND GOERTEK LAUNCH ERA OF SEAMLESS VIRTUAL REALITY WITH CUTTING-EDGE NEW REFERENCE DESIGN

The Smallest VR Headset - About Half the Size and Weight of Traditional Devices - Offers Film-like Images

SANTA CLARA, CA – June 1st, 2017 - Kopin Corporation (NASDAQ:KOPN) (“Kopin”) today kicked off the era of Seamless Virtual Reality. On stage at Augmented World Expo, the Company showcased a groundbreaking reference design, codenamed Elf VR, for a new Head-Mounted Display created with its partner Goertek Inc. (“Goertek”), the world leader in VR headset manufacturing.

When brought to market, the new design will eliminate the barriers that have long stood in the way of delivering an effective VR experience. Traditional VR headsets have been uncomfortably bulky and heavy, while low resolution and sluggish frame rates caused the screen-door effect and nausea, making them usable for only tens of minutes at a time at best.

Kopin’s Lightning Display – A new approach to VR

To resolve these issues, Kopin’s engineers drew on the company’s three decades of display experience to create the “Lightning™” OLED microdisplay panel, putting an end to the dreaded screen-door effect with 2048 x 2048 resolution in each eye, more than three times the resolution of Oculus Rift or HTC Vive, at an unbelievable pixel density of 2,940 pixels per inch, five times that of standard displays.

Kopin first showcased its Lightning display at CES 2017, to overwhelming acclaim and a coveted CES Innovation Award. PC Magazine wrote that “the most advanced display I saw came from Kopin” and AnandTech said “Seeing is believing…I quite literally could not see anything resembling aliasing on the display even with a 10x loupe to try and look more closely.”

In addition, the panel runs at a 120 Hz refresh rate, 33% faster than what traditional HMDs offer, for reduced motion blur, latency, and flicker. As a result, nausea and fatigue are eliminated. Because Kopin’s panel is OLED-based and has integrated driver circuits, it requires much less power, which extends battery life and substantially reduces heat output.

“It is now time for us to move beyond our conventional expectation of what virtual reality can be and strive for more,” explained Kopin founder and CEO John Fan. “Great progress has been made this year, although challenges remain. This reference design, created with our partner Goertek, is a significant achievement. It is much lighter and fully 40% smaller than standard solutions, so that it can be worn for long periods without discomfort. At the same time, our OLED microdisplay panel achieves such high resolution and frame rate that it delivers a VR experience that truly approaches reality for markets including gaming, pro applications or film.”

In addition to the game-changing new design, Kopin previously announced an alliance with BOE Technology Group Co. Ltd. (BOE) and Yunnan OLiGHTEK Opto-Electronic Technology Co., Ltd. for OLED display manufacturing. As part of that alliance, all parties will contribute up to $150 million to establish a high-volume, state-of-the-art facility to manufacture OLED microdisplays to support the growing AR and VR markets. The new facility, which would be the world’s largest OLED-on-silicon manufacturing center, will be managed by BOE and is expected to be built in Kunming, Yunnan Province, China over the next two years. BOE is the world leader in display panels for mobile phones and tablets.

Technical specs:

- Elf VR is equipped with Kopin "Lightning" OLED microdisplay panels, each with 2048 x 2048 resolution, to provide binocular 4K image resolution at a 120 Hz refresh rate. With both 4K ultra-high resolution and a 120 Hz refresh rate, Elf VR delivers very smooth, high-quality images and effectively reduces the sense of vertigo.

- The microdisplay panels are manufactured with advanced ultra-precise processing techniques. Their pixel density is approximately 400% higher than that of conventional TFT-LCD, OLED, and AMOLED displays, and the screen size can be reduced to approximately 1/5 at a similar pixel resolution.

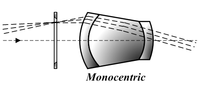

- Elf VR also adopts an advanced optical solution with a compact multi-lens design, which reduces the thickness of the optical module by around 60% and the total weight of the VR HMD by around 50%, significantly improving comfort during extended wear.

- The reference design supports two novel optics solutions: a 70-degree FOV for film-like beauty or a 100-degree FOV for deep immersion.
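
The headline figures in the release can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: the 2048 x 2048 resolution, 2,940 pixels per inch, and 120 Hz values come from the release, while the comparison baseline (1080 x 1200 pixels per eye at 90 Hz, the figures commonly quoted for the first consumer Oculus Rift and HTC Vive) is an assumption that the release does not state. The function names are hypothetical.

```python
import math

# Figures stated in the release.
PANEL_PX_PER_SIDE = 2048      # pixels along each edge, per eye
PIXEL_DENSITY_PPI = 2940      # pixels per inch
ELF_REFRESH_HZ = 120

# Assumptions, NOT from the release: per-eye resolution and refresh rate
# commonly quoted for the first consumer Oculus Rift and HTC Vive.
BASELINE_PX = (1080, 1200)
BASELINE_REFRESH_HZ = 90


def panel_geometry(px_per_side, ppi):
    """Return (edge length, diagonal) in inches for a square panel."""
    side = px_per_side / ppi
    return side, side * math.sqrt(2)


def pixel_ratio(px_per_side, baseline_px):
    """Ratio of total pixels per eye versus a baseline (width, height)."""
    return (px_per_side ** 2) / (baseline_px[0] * baseline_px[1])


def refresh_gain_percent(new_hz, old_hz):
    """Percentage increase in refresh rate from old_hz to new_hz."""
    return (new_hz / old_hz - 1) * 100


def frame_time_ms(hz):
    """Time between refreshes, in milliseconds."""
    return 1000 / hz


if __name__ == "__main__":
    side, diagonal = panel_geometry(PANEL_PX_PER_SIDE, PIXEL_DENSITY_PPI)
    print(f"Panel edge:     {side:.3f} in")
    print(f"Panel diagonal: {diagonal:.3f} in")
    print(f"Pixels per eye vs baseline: "
          f"{pixel_ratio(PANEL_PX_PER_SIDE, BASELINE_PX):.2f}x")
    print(f"Refresh gain:   "
          f"{refresh_gain_percent(ELF_REFRESH_HZ, BASELINE_REFRESH_HZ):.1f}%")
    print(f"Frame time:     {frame_time_ms(ELF_REFRESH_HZ):.2f} ms "
          f"vs {frame_time_ms(BASELINE_REFRESH_HZ):.2f} ms")
```

Under these assumptions, the panel edge works out to roughly 0.7 inch with a diagonal just under 1 inch, and the "more than three times" and "33% faster" claims are consistent with the assumed baseline.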

Thank you for contacting J-Tech Digital. HDbitT is sort of proprietary. It was built on an already known protocol (TCP/IP), but does not work with other TCP/IP HDMI extenders. It also uses multicast (if your network switch does not support multicast, your switch will treat the traffic as broadcast). If you would like to know any other information please let me know.

J-Tech Digital Support Team

12855 Capricorn ST Stafford, TX 77477

Tel: 832-886-4042

Email: support@jtechdigital.com