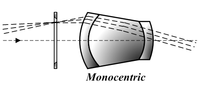

The operative word here is Monocentric

Monocentric

A Wide-Field-of-View Monocentric Light Field Camera

Donald G. Dansereau, Glenn Schuster, Joseph Ford, and Gordon Wetzstein. Stanford University, Department of Electrical Engineering

http://www.computationalimaging.org/wp-content/uploads/2017/04/LFMonocentric.pdf

Abstract: Light field (LF) capture and processing are important in an expanding range of computer vision applications, offering rich textural and depth information and simplification of conventionally complex tasks. Although LF cameras are commercially available, no existing device offers wide field-of-view (FOV) imaging. This is due in part to the limitations of fisheye lenses, for which a fundamentally constrained entrance pupil diameter severely limits depth sensitivity. In this work we describe a novel, compact optical design that couples a monocentric lens with multiple sensors using microlens arrays, allowing LF capture with an unprecedented FOV. Leveraging capabilities of the LF representation, we propose a novel method for efficiently coupling the spherical lens and planar sensors, replacing expensive and bulky fiber bundles. We construct a single sensor LF camera prototype, rotating the sensor relative to a fixed main lens to emulate a wide-FOV multi-sensor scenario. Finally, we describe a processing tool chain, including a convenient spherical LF parameterization, and demonstrate depth estimation and post-capture refocus for indoor and outdoor panoramas with 15 × 15 × 1600 × 200 pixels (72 MPix) and a 138° FOV.
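As a quick sanity check on the figures quoted in the abstract, the sketch below multiplies out the four light field dimensions. The split into a 15 × 15 grid of views and 1600 × 200 pixels per view is an assumption based on how light fields are usually laid out; the abstract gives only the four numbers and the 72 MPix total.

```python
from math import prod

# Light field dimensions as quoted in the abstract.
# Assumption: the first two axes index views (directions),
# the last two index pixels within each view.
lf_shape = (15, 15, 1600, 200)

total_samples = prod(lf_shape)               # every recorded ray sample
views = lf_shape[0] * lf_shape[1]            # number of distinct views
pixels_per_view = lf_shape[2] * lf_shape[3]  # resolution of a single view
megapixels = total_samples / 1e6

print(f"{views} views x {pixels_per_view} pixels = {megapixels:.0f} MPix")
```

Under that assumed layout, each individual view is a fairly modest 0.32 MPix; the 72 MPix total comes from having 225 of them.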

---------

Designing a 4D camera for robots

Stanford engineers have developed a 4D camera with an extra-wide field of view. They believe this camera can be better than current options for close-up robotic vision and augmented reality.

A light field camera, or standard plenoptic camera, works by capturing information about the light field emanating from a scene. It measures both the intensity of the light and the direction in which the light rays travel. Traditional photography captures only light intensity.

The researchers call their design the “first-ever single-lens, wide field of view, light field camera.” The camera combines the directional information it has gathered about the light in the scene with the 2D image to create the 4D image.

This means the photo can be refocused after the image has been captured. The researchers use the analogy of looking out a window versus looking through a peephole to describe the difference between traditional photography and the new technology. They say, “A 2D photo is like a peephole because you can’t move your head around to gain more information about depth, translucency or light scattering. Looking through a window, you can move and, as a result, identify features like shape, transparency and shininess.”
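To make post-capture refocus concrete, here is a minimal sketch of the classic shift-and-sum approach on a small synthetic light field. It assumes a planar grid of views stored as a NumPy array of shape (U, V, H, W); it is not the spherical parameterization or the tool chain from the paper, and the names `refocus` and `synthetic_point_lf` are made up for illustration.

```python
import numpy as np

def refocus(lf, slope):
    """Shift-and-sum refocus of a light field shaped (U, V, H, W).

    Each view is shifted in proportion to its offset from the central
    view, then all views are averaged. `slope` selects the depth that
    ends up in focus. np.roll wraps around at the image borders, which
    is acceptable for a sketch but not for real images.
    """
    U, V, H, W = lf.shape
    cu, cv = (U - 1) / 2, (V - 1) / 2
    out = np.zeros((H, W), dtype=float)
    for u in range(U):
        for v in range(V):
            dy = int(round(slope * (u - cu)))
            dx = int(round(slope * (v - cv)))
            out += np.roll(lf[u, v], shift=(dy, dx), axis=(0, 1))
    return out / (U * V)

def synthetic_point_lf(U=5, V=5, H=32, W=32, disparity=2):
    """A single bright point that moves `disparity` pixels per view step."""
    lf = np.zeros((U, V, H, W))
    cu, cv = (U - 1) // 2, (V - 1) // 2
    for u in range(U):
        for v in range(V):
            lf[u, v, H // 2 + disparity * (u - cu), W // 2 + disparity * (v - cv)] = 1.0
    return lf

lf = synthetic_point_lf()
sharp = refocus(lf, slope=-2)   # shifts cancel the parallax: point in focus
blurry = refocus(lf, slope=0)   # no shifts: point smeared across 25 pixels
print(sharp.max(), blurry.max())
```

With the slope matched to the point's parallax, every view lands on the same pixel and the point is sharp; with any other slope the same energy is spread over many pixels, which is what defocus blur looks like in this model.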

The 4D camera’s unique qualities make it well suited for use with robots. For instance, images captured by a search and rescue robot could be zoomed in and refocused to provide important information to base control. The imagery produced by the 4D camera could also have applications in augmented reality, as the information-rich images could help produce better-quality rendering.

The 4D camera is still at a proof-of-concept stage, and too big for any of the possible future applications. But now that the technology is at a working stage, smaller and lighter versions can be developed. The researchers explain the motivation for creating a camera specifically for robots. Donald Dansereau, a postdoctoral fellow in electrical engineering, explains, “We want to consider what would be the right camera for a robot that drives or delivers packages by air. We’re great at making cameras for humans but do robots need to see the way humans do? Probably not.”

The research will be presented at the computer vision conference CVPR 2017 on July 23.

First images from the world's only single-lens wide-FOV light field camera.

From CVPR 2017 paper "A Wide-Field-of-View Monocentric Light Field Camera",

http://dgd.vision/Projects/LFMonocentric/

This parallax pan scrolls through a 138-degree, 72-MPix light field captured using our optical prototype. Shifting the virtual camera position over a circular trajectory during the pan reveals the parallax information captured by the LF.
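One way to picture the circular trajectory is as a sequence of view indices chosen around a circle in the grid of views. The sketch below assumes a 15 × 15 grid (matching the quoted light field size) and nearest-view selection; the function name, frame count, and radius are hypothetical, and the actual video may well interpolate between views rather than snap to the nearest one.

```python
import math

def circular_view_indices(n_views=15, n_frames=8, radius_fraction=0.8):
    """Return (u, v) view indices along a circle in an n_views x n_views grid.

    The circle is centered on the middle view, and its radius is a
    fraction of the distance from the center to the edge of the grid.
    """
    center = (n_views - 1) / 2
    radius = radius_fraction * center
    indices = []
    for k in range(n_frames):
        theta = 2 * math.pi * k / n_frames
        u = int(round(center + radius * math.sin(theta)))
        v = int(round(center + radius * math.cos(theta)))
        indices.append((u, v))
    return indices

path = circular_view_indices()
print(path)
```

Stepping through these views while panning across the image makes nearby objects shift more than distant ones, which is the parallax cue the description refers to.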

There is no post-processing or alignment between fields; this is the raw light field as measured by the camera.

Other related work:

http://spie.org/newsroom/6666-panoramic-full-frame-imaging-with-monocentric-lenses-and-curved-fiber-bundles

Monocentric lens-based multi-scale optical systems and methods of use US 9256056 B2
